Common Pitfalls in AI-Generated Content: What to Watch Out For

Key Takeaways

- AI-generated content can spread misinformation, as in the case where a blog post shared a fabricated command, which shows the risk of relying on AI output without supervision.

- The article emphasizes the importance of human oversight in preventing common pitfalls with AI-generated content, highlighting the potential problems that arise when companies prioritize quantity over quality in content creation.

- Understanding the impact of AI-generated content on SEO, brand perception, and customer conversion can help businesses make informed decisions when utilizing AI platforms like ChatGPT, Claude, Copilot, xAI, and Gemini.

AI-generated content is everywhere. But aside from the common problems of overused continuous tense, robotic writing tone, and repetitive phrases, there are bigger issues.

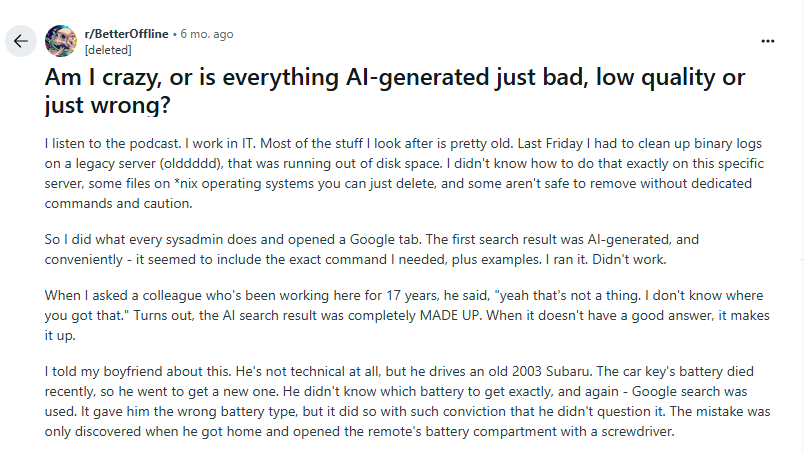

For example, this Redditor, a system admin, needed a command to clean binary logs on a client’s legacy server. As anyone would do, she typed the query into her web browser and clicked on the top result. In it, she found a command she could run to clean these binary logs, but it didn’t work.

She had to ask a senior colleague for help, which was when she realized that the command in that blog post doesn’t exist and was probably fabricated by AI (the content was AI-generated).

Her boyfriend also needed a car battery for an old Subaru, and a top Google result misled them into getting the wrong battery type:

These are just a few instances of the common pitfalls in AI-generated content, especially when it’s published without human supervision.

In this article, I’ll explain other problems with AI-generated content. I’ll also hint at where companies make mistakes when they use AI to write and optimize for AI-visibility.

8 common pitfalls with AI-generated content

For context, AI-generated content is content written partially or wholly with the help of AI chatbots. These include ChatGPT, Claude, Copilot, xAI, Gemini, and any other AI platform.

This content is produced for commercial consumption, and it can affect your SEO, your brand, and your ability to convert a reader into a customer. The common problems with this type of content include:

1. Companies prioritize TOFU over BOFU content

TOFU content (top-of-the-funnel content) is content that targets informational topics on your blog. This is content that basically answers top industry questions and usually has no direct relevance to your product or service.

For example, say you sell fashion eyewear and publish an article about “What are the benefits of direct-to-consumer eyewear brands?”

The traffic from a topic like this has no relevance to your product.

This is because the readers aren’t looking to buy eyeglasses. They want to know the market value of selling eyewear direct-to-consumer, a topic that belongs in an academic journal instead.

So, the traffic you get from such a topic isn’t high-quality traffic. But it will give you the illusion that your SEO is working even if there is no revenue (or reasonable leads) to back it up.

BOFU content (bottom-of-the-funnel content), on the other hand, is content that relates directly to your product or business.

For example, the eyewear brand publishes an article on “What are the best sources for fashionable sunglasses under $100?” This type of topic gets them in front of actual customers who need different fashionable sunglasses.

But the common pitfall with AI-generated content, in this context, is that some marketers and companies believe TOFU content is the type of content to pursue. And AI visibility tracking tools in SEMrush and Ahrefs don’t help.

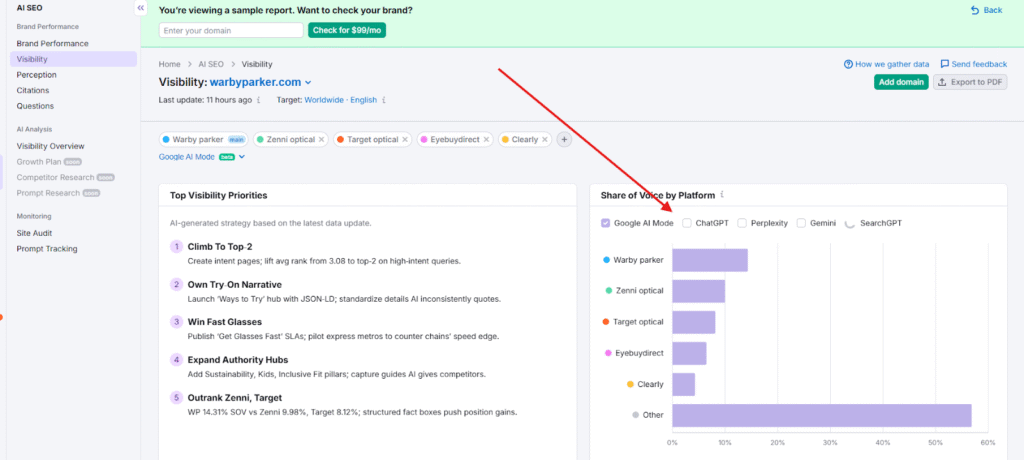

For example, SEMrush’s AI SEO feature for this example company, Warby Parker, shows the share of voice (SOV) of its competitors, even though SOV is not really a relevant SEO metric anymore. Companies that don’t know this will worry about their declining SOV.

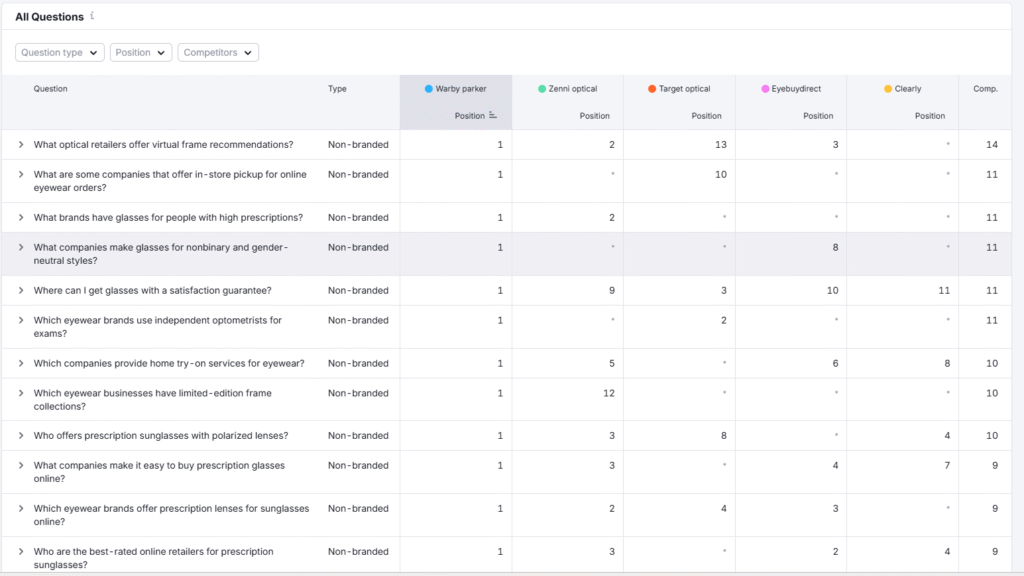

It then goes on to show summaries of questions people ask on AI chatbots, which aren’t bad suggestions:

The problem with these suggestions is that some companies may want to optimize their content for all these queries. And by doing so, they create irrelevant content with low-quality traffic value.

This is because the organic traffic from the TOFU keywords is not valuable from a conversion perspective. And they’ve just wasted time writing and optimizing articles that have no correlation with their business/product.

In the end, they show up for keywords and prompts with no buying intent, and this is something we’ve seen since AI chatbots rose in popularity.

2. AI writing tools use repetitive language/diction

Have you ever read an article where two or three consecutive sentences mean the same thing?

There is a chance that it was generated by AI.

While this isn’t true in all cases (as “newbie writers” may also explain a concept in three different ways), it is common in AI content.

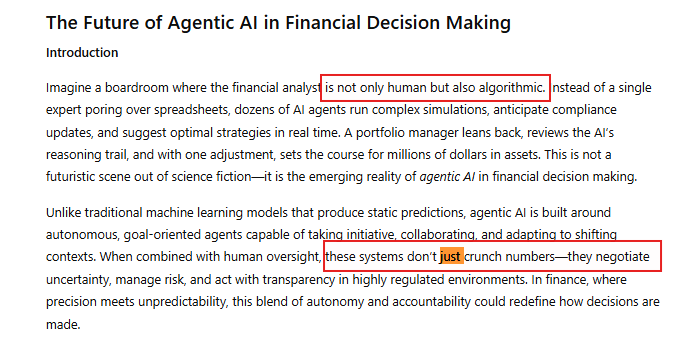

Another instance of repetition is when chatbots like ChatGPT write like this:

“Imagine a boardroom where the financial analyst is not only human but also algorithmic.” Or “these systems don’t just crunch numbers—they negotiate uncertainty, manage risk,…”

These are vague, unhelpful phrases that humans rarely use. And when they do, the article isn’t spammed with them.

Ludwig Yeetgenstein, who writes about LLMs and neural networks, shared his frustration on X.com:

He explains that the repetitive hiccup is a problem with LLM post-training. For readers of your blog, repetitions like this (which are often left unedited in the published version of AI-generated content) disrupt the flow of the piece. They can cause mental exhaustion, and they also make AI-generated content easy to spot, which may reduce reader trust.

In other words, readers will think you’re not creative because your content has the pattern of AI chatbots. And you don’t want your readers (who you hope will become customers) to think that of you.
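One way to catch this kind of repetition before publishing is to count recurring phrases in a draft. The snippet below is only a rough sketch, not a detector of AI writing: the function name `repeated_phrases`, the three-word window, and the sample draft are all assumptions made for illustration.

```python
import re
from collections import Counter

def repeated_phrases(text, n=3, min_count=2):
    """Return n-word phrases that occur at least min_count times.

    Note: phrases are counted across sentence boundaries, so treat
    the result as a list of candidates to review, not a verdict.
    """
    words = re.findall(r"[a-z']+", text.lower())
    grams = Counter(
        " ".join(words[i:i + n]) for i in range(len(words) - n + 1)
    )
    return {phrase: count for phrase, count in grams.items() if count >= min_count}

# Hypothetical draft that leans on the same sentence opener twice.
draft = (
    "These systems don't just crunch numbers. "
    "These systems don't just store data. "
    "They manage risk."
)

print(repeated_phrases(draft))
```

A human editor still has to decide which of the flagged phrases are worth rewriting; the count only points to where to look.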

3. Publishing AI-generated content without any edits

I’ve hinted at this already. There are many marketers and companies who use AI to generate and publish content at scale without human oversight.

In the short term, it will affect the reputation of your website as AI-generated content (sometimes) isn’t helpful content.

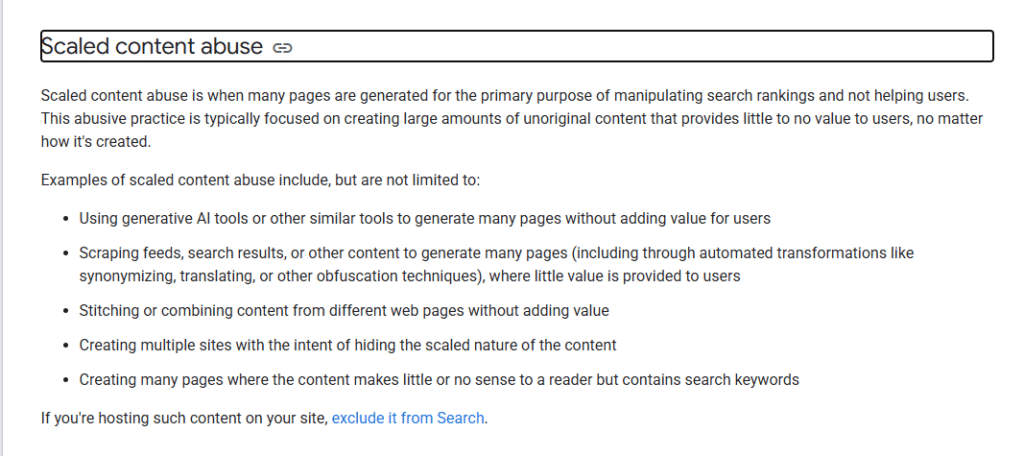

In the long term, Google and other search engines can penalize your website (with reduced organic traffic), especially if the AI-generated pages are deemed unhelpful.

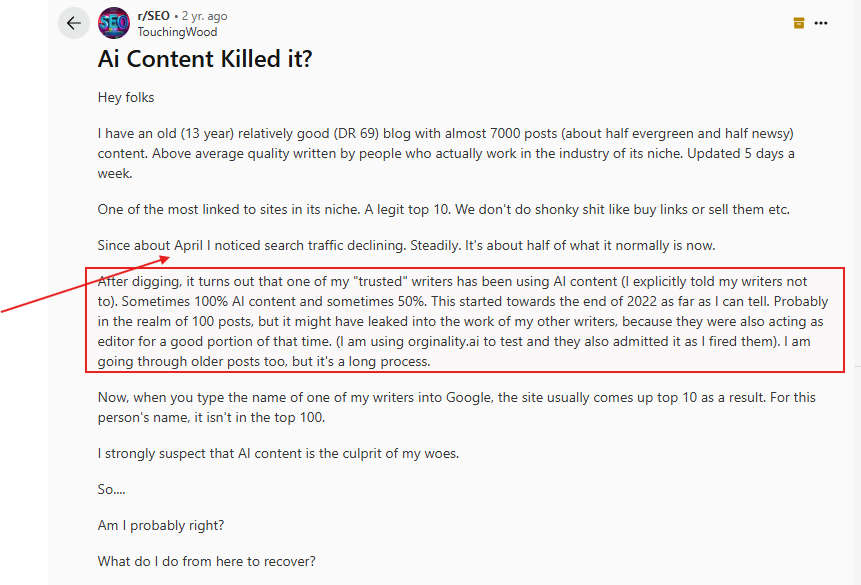

This happened to a Redditor who has a site with over 7K blog posts and a DR of 69:

Google, as part of its rating guidelines, has said that automated or AI-generated content may now earn the lowest rating. So, if your content is chiefly AI-generated, your website may be deemed less helpful and relevant to the search query.

And over time, this may affect your rankings and performance on the search engine results pages (SERPs).

4. Factual errors

Many AI chatbots provide incorrect or outdated information in their responses. And if you don’t know your industry or the topic you mean to write about, you’ll promote errors with your blog posts.

I already mentioned the system admin who wanted to run a command to clean a binary log on a legacy server, and the first blog post, AI-generated, gave her incorrect commands.

There are several instances like this.

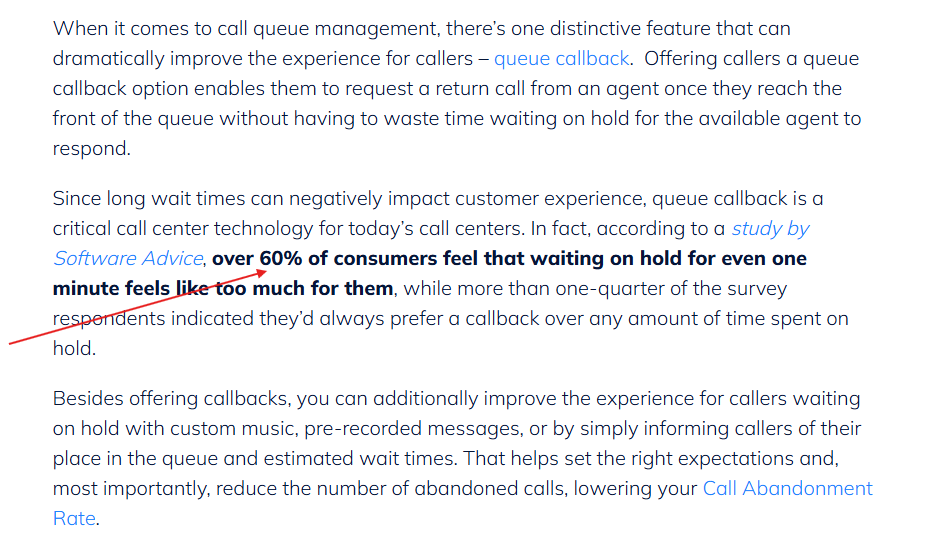

For example, in an article about “call center technology”, this writer referenced a survey by Software Advice that is outdated and probably doesn’t even exist:

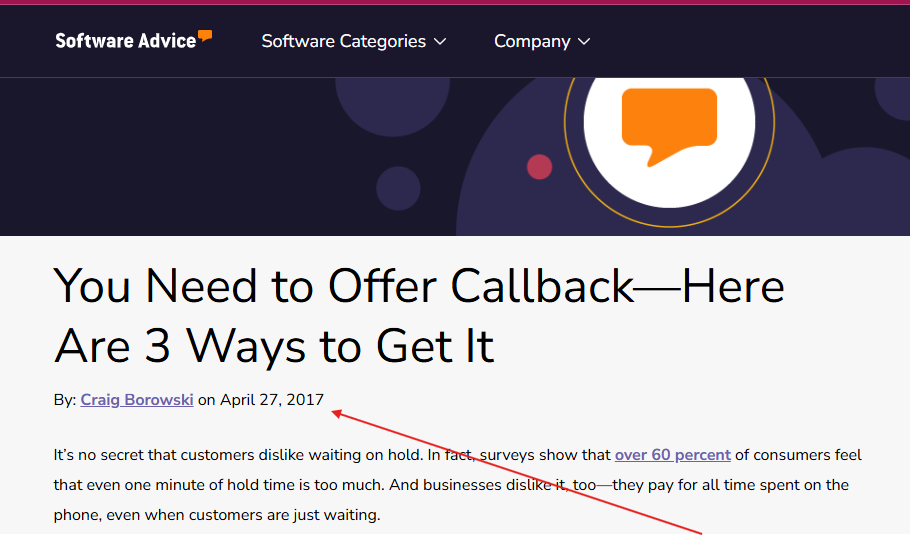

When I clicked on the Software Advice link, I found out that the survey wasn’t by Software Advice. The survey itself was also based on outdated data. This article was published in 2025, and the quoted source was from 2017:

Since the survey wasn’t by Software Advice, I followed the link to find the primary source. It led me to a page that doesn’t exist on the PRWeb website:

In other words, the data that an article on Google’s first page cited doesn’t exist.

Now, imagine that companies took actions (about upgrading their call center technology) that could cost them thousands of dollars based on wrong, unverified data.

Another problem with AI-generated content when it comes to factual data is that it sometimes points to third-party sources rather than the original.

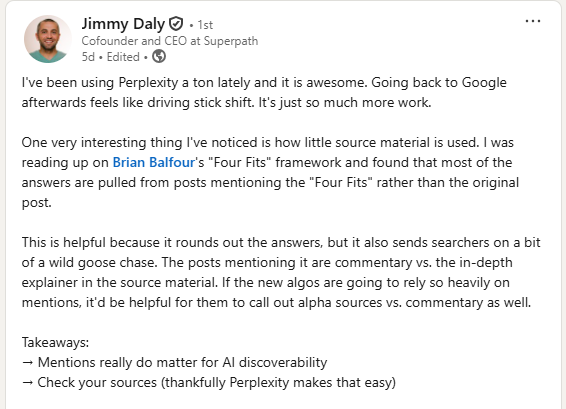

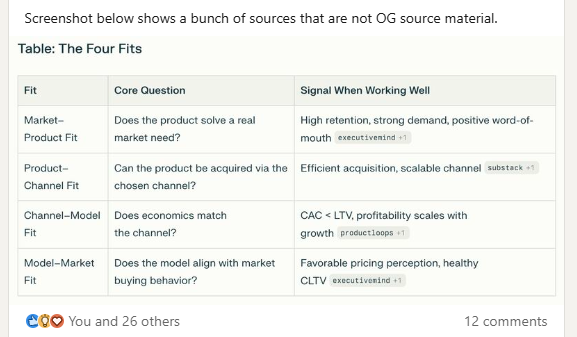

For example, according to Jimmy Daly, a senior content marketer, Perplexity AI is awesome. But for his research on Brian Balfour’s “Four Fits” framework, it pulled answers from posts that mentioned the “Four Fits” framework rather than the original post that discussed the framework:

Here’s the screenshot where Perplexity AI linked to third-party sources and not the original source:

All this is to say that you will always need to fact-check and verify claims by AI before you publish your article.
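Part of that fact-checking can be made routine. As a small sketch, the snippet below flags cited sources that are much older than the article citing them, using the 2025 article and 2017 survey from the example above. The function name `stale_sources`, the three-year threshold, and the second citation are assumptions for illustration, and the check says nothing about whether a source actually exists or is quoted correctly.

```python
def stale_sources(published_year, sources, max_age_years=3):
    """Return (label, age_in_years) pairs for sources older than max_age_years."""
    flagged = []
    for label, source_year in sources.items():
        age = published_year - source_year
        if age > max_age_years:
            flagged.append((label, age))
    return flagged

citations = {
    "call center survey": 2017,          # year of the source quoted in the example
    "hypothetical vendor report": 2024,  # invented entry for contrast
}

print(stale_sources(2025, citations))
```

Anything this flags still needs a person to open the link, find the primary source, and confirm the numbers.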

5. Overdependence on AI

A common pitfall for AI chatbot users is that heavy reliance on the tool erodes their ability to think through a sentence or form an original opinion/argument.

Many users now delegate tasks like rewriting their titles, outlines, paragraphs, and even edits to AI.

This is because it’s harder to write from scratch, and easier to prompt an AI tool for the creative work of thinking and writing. Yet when you think it through, you’ll realize that it actually takes creative reasoning to prompt an AI tool well enough to get valuable answers.

In the end, the reliance on AI-generated content (and AI chatbots) produces content that is disconnected from the author.

The rhythm is generic, which may affect how well the content resonates with your intended audience.

So, finally, while it’s good to use AI to move faster, your content must start with an idea (or analogy) that belongs to you. If it doesn’t, the content will always sound borrowed, and may later eat into your ability to write coherent paragraphs unassisted.

6. The “AI overlay” hides weak product marketing

An overlooked side effect of AI-led content is that your team may avoid tackling their weak product messaging. That is, if your core message is vague, AI content can amplify that vagueness, which can hurt your brand.

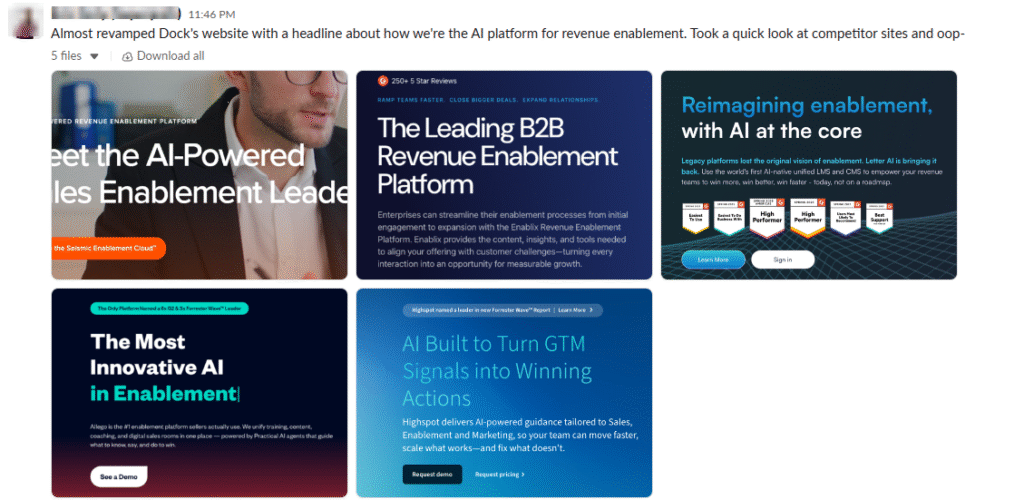

For example, many software companies describe themselves as “AI-powered” without a real, helpful statement on what they do and how they’re different from their competitors.

When a prospect needs a solution for a specific problem and checks their website (or uses an AI tool to learn about them), they won’t find any specific sentence that explains what they do and how. This makes all their marketing strategies fall apart, even before the prospect reads a blog post.

For context, here’s a marketing leader at Dock complaining about the “AI-garbage” in their niche:

In other words, when your products are described in vague terms like this, it’s probably because you are yet to clarify what they mean in your context.

AI can’t help you nail your product’s position in your market. It relies on what’s already available online.

And if what’s available is vague, it’ll affect how users perceive your brand and how AI tools describe your product.

To fix this, explain, in clear words:

- What your product does (or the other tool it replaces).

- How it delivers value that matters to your target market, and

- What your strongest proof point is (numbers, screenshots, testimonials).

Then use these on your landing pages, homepage, and other high-value pages where you wrote about your product.

Only then should you create content with AI in a way that reflects and supports that position/messaging.

7. AI-generated FAQs take up more space than they should in your blog content

A popular feature in AI visibility (and AI writing) tools is the “People Also Ask” extractor.

It pulls common questions from search engines or chatbot prompts, and suggests you add them as FAQs.

At first glance, this is helpful.

But too often, content teams dump irrelevant FAQs at the bottom of their blog posts in the hope that they’ll help them rank in a featured snippet. This leads to a section filled with low-value, low-intent questions like “Is SaaS the future?” or “Why is data important in business?”

For context, an article I read discussed call center technology (the now and the future).

Yet the FAQ section was filled with unnecessary queries like “what is the future of call center technology”, “will ChatGPT replace call centers”, and “what are the latest call center technologies”. These were basically what the blog post had already discussed:

In summary, most of the FAQs that these AI writing and visibility tools pull are not actual People Also Ask questions. They are phrases that seem to make sense, but in reality are fillers.

And if your page has FAQs that are not related to actual queries your target users have, you’re misusing AI, and it will not help your SEO.
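A quick sanity check for this is to compare each suggested FAQ against the headings the post already has. The snippet below is a minimal sketch of that idea using simple keyword overlap. The function names, the stopword list, the 0.6 threshold, the two headings, and the third FAQ are all hypothetical; only the first two questions come from the example above.

```python
import re

# Small, hand-picked stopword list; an assumption for this sketch.
STOPWORDS = {"what", "is", "are", "the", "of", "a", "an", "will", "in", "to", "and"}

def keywords(text):
    """Lowercase the text and keep words that are not stopwords."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}

def redundant_faqs(faqs, headings, threshold=0.6):
    """Return FAQs whose keywords are mostly covered by a single heading."""
    heading_sets = [keywords(h) for h in headings]
    flagged = []
    for question in faqs:
        q_words = keywords(question)
        if not q_words:
            continue
        # Share of the question's keywords found in the best-matching heading.
        best = max(
            (len(q_words & h) / len(q_words) for h in heading_sets),
            default=0.0,
        )
        if best >= threshold:
            flagged.append(question)
    return flagged

headings = [
    "The future of call center technology",  # hypothetical heading
    "Latest call center technologies",       # hypothetical heading
]
faqs = [
    "What is the future of call center technology",
    "What are the latest call center technologies",
    "How much does call center software cost",  # hypothetical FAQ
]

print(redundant_faqs(faqs, headings))
```

Keyword overlap is crude, so a flagged question is only a prompt to check whether the post has already answered it.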

8. AI-generated thought leadership dilutes your POV

We’ve seen many “thought leaders” use AI-generated content to represent their ideas on LinkedIn, X, and in their blog posts.

This is bad because a good thought leadership piece should express the leader’s idea, not just the summary or AI-generated version of the idea.

This is because AI writing tools do not possess the ability to think like a human does. So, when you want them to reflect your thoughts, they can easily default to both-sidesism and fence-sitting, which cannot help you argue your stance.

For example, a piece on “remote vs. hybrid work management principles” can easily become a laundry list of pros and cons, rather than a POV that challenges the reader or shows off your company policy.

Compare that to this article from Lars Lofgren on his 10 management principles, which was about how he manages his team in hybrid and remote work environments:

In it, he has ideas such as:

- If Morale is Bad, Put Just One Win on the Board.

- To Get Team Buy-in, Make a Promise and Keep It.

- Break Work into Pieces That Can Be Finished in 2 Weeks or Less.

- Empower Your Team Members to Set Their Own Deadlines.

If he had used AI to write the piece, it wouldn’t read the way it does. And it wouldn’t be as helpful to the many people who have shared and quoted his essay on reputable forums.

The bottom line: don’t use AI writing tools for your thought leadership content. Instead, write in the way that appeals to you, then hire a human writer or editor to flesh out your ideas and make your output more readable and captivating than an AI chatbot would.

Conclusion

The common pitfalls of AI-generated content are easy to miss when you’re committed to pumping out content at scale. But aside from the damage to your SEO, they also affect your brand, as AI-generated content is now easily identified.

Aside from this, AI-generated content may seem confident and helpful at first. But when you question the responses, you may find that they rest on a flawed and misleading argument. Or in some cases, they’re padded with filler words that don’t say anything specific.

In the end, what matters is how much you supervise the AI-content creation process. You need to write better prompts. But aside from this, you need to thoroughly review AI-generated content so you can add the human nuance that helps you improve the quality of your content.